I-could-have-written-that

From Algolit

| Type: | Connected algoliterary work |

| Datasets: | custom textual sources, modality.py, Twitter API, DuckDuckGo API, Wikipedia API |

| Technique: | rule-based learning, supervised learning, unsupervised learning, bag-of-words, cosine_similarity |

| Collectively developed by: | The people behind Pattern, SciKit Learn, Python, Nltk, Jinja2 & Manetta Berends |

i-could-have-written-that* is a practice-based research project about text-based machine learning, questioning the readerly nature of the techniques and proposing to represent them as writing machines. The project includes the poster series from Myth (-1.00) to Power (+1.00) and three writing-systems (writing from Myth (-1.00) to Power (+1.00), Supervised writing, and Cosine Similarity morphs) that translate technical elements from machine learning into graphical user interfaces in the browser.

The interfaces enable their users to explore the techniques and do a series of test-runs themselves with a textual data source of choice. After processing the textual source of choice, the writing-systems offer the option to export their outputs to a PDF document.

from Myth (-1.00) to Power (+1.00)

from myth (-1.00) to power (+1.00) is a poster series and linguistic mirror reflecting on the subject of certainty in text mining.

The series of statements is the product of a poetic translation exercise based on a script called modality.py, which is included in the text mining software package Pattern (University of Antwerp). This rule-based script is written to calculate the degree of certainty of a sentence, expressed as a value between -1.00 and +1.00.

Modality.py is a rule-based program, one of the older types of text mining techniques. The series of calculations in a rule-based program is determined by a set of rules, written after a long, intensive period of linguistic research on a specific subject. A rule-based program is very precise and effective, but also very static and specific. This makes rule-based programs an expensive type of text mining technique in terms of time, labour, and the difficulty of re-using a program on different types of text.

To overcome these expenses, rule-based programs have largely been replaced by pattern recognition techniques such as supervised learning and neural networks, where the rules of a program are based on patterns in large datasets.

Modality.py

The program on which these posters are based, called modality.py, is written to calculate a degree of certainty in academic papers, and to express this degree as a value between -1.00 and +1.00. The sources used for modality.py are academic papers from a dataset called 'BioScope' and Wikipedia training data from the CoNLL2010 Shared Task 1. This dataset includes 'weasel words', words that are annotated as 'vague' by the Wikipedia community. Examples of weasel words are: some people say, many scholars state, it is believed/regarded, scientists claim, it is often said.

The script modality.py is an example of a rule-based program, full of pre-defined values. The words fact (+1.00), evidence (+0.75) and (even) data (+0.75) indicate a high level of certainty, as opposed to words like fiction (-1.00) and belief (-0.25).

In the script, the concept of being certain is divided into nine categories:

-1.00 = NEGATIVE

-0.75 = NEGATIVE, with slight doubts

-0.50 = NEGATIVE, with doubts

-0.25 = NEUTRAL, slightly negative

+0.00 = NEUTRAL

+0.25 = NEUTRAL, slightly positive

+0.50 = POSITIVE, with doubts

+0.75 = POSITIVE, with slight doubts

+1.00 = POSITIVE

after which a set of words is connected to each category, for example this set of nouns:

-1.00: d("fantasy", "fiction", "lie", "myth", "nonsense"),

-0.75: d("controversy"),

-0.50: d("criticism", "debate", "doubt"),

-0.25: d("belief", "chance", "faith", "luck", "perception", "speculation"),

0.00: d("challenge", "guess", "feeling", "hunch", "opinion", "possibility", "question"),

+0.25: d("assumption", "expectation", "hypothesis", "notion", "others", "team"),

+0.50: d("example", "proces", "theory"),

+0.75: d("conclusion", "data", "evidence", "majority", "proof", "symptom", "symptoms"),

+1.00: d("fact", "truth", "power")

The poster series is a poetic translation exercise that stems from an interest in a numerical perception of human language, while bending strict categories.
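To make the idea of a rule-based lookup concrete, here is a minimal Python sketch. It is not the code of modality.py: it only reuses the noun scores listed above, and the choice to average the scores of the matched words is an assumption made for this illustration. The names `NOUN_CERTAINTY`, `WORD_SCORE` and `certainty` are hypothetical.

```python
# Illustrative sketch only, not Pattern's modality.py.
# Assumption: the certainty of a sentence is the mean score of the listed nouns it contains.
import re

NOUN_CERTAINTY = {
    -1.00: ("fantasy", "fiction", "lie", "myth", "nonsense"),
    -0.75: ("controversy",),
    -0.50: ("criticism", "debate", "doubt"),
    -0.25: ("belief", "chance", "faith", "luck", "perception", "speculation"),
    0.00: ("challenge", "guess", "feeling", "hunch", "opinion", "possibility", "question"),
    +0.25: ("assumption", "expectation", "hypothesis", "notion", "others", "team"),
    +0.50: ("example", "proces", "theory"),  # spelling kept as in the list above
    +0.75: ("conclusion", "data", "evidence", "majority", "proof", "symptom", "symptoms"),
    +1.00: ("fact", "truth", "power"),
}

# Invert the table into a word -> score lookup.
WORD_SCORE = {word: score for score, words in NOUN_CERTAINTY.items() for word in words}


def certainty(sentence):
    """Return the mean score of the listed nouns found in the sentence, or 0.0 if none match."""
    words = re.findall(r"[a-z]+", sentence.lower())
    scores = [WORD_SCORE[w] for w in words if w in WORD_SCORE]
    if not scores:
        return 0.0
    return sum(scores) / len(scores)


print(certainty("The evidence is a fact."))     # mean of +0.75 and +1.00
print(certainty("That myth is pure fiction."))  # mean of -1.00 and -1.00
```

Even in this reduced form the static character of the approach is visible: a word that is not in the table simply does not count.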

writing from Myth (-1.00) to Power (+1.00)

The writing-system writing from Myth (-1.00) to Power (+1.00) is based on the certainty-detection script modality.py, on which the poster series is also based. The interface is a rule-based reading tool that highlights the effect of the rules written by the scientists at the University of Antwerp. The interface also offers the option to change the rules and create a custom reading-rule-set that is applied to a text of choice.

The default framework of rules in this writing-system comes from modality.py. The rules are extracted from the BioScope dataset and Wikipedia articles provided by the CoNLL2010 Shared Task 1, combined with weasel words (words that are tagged by the Wikipedia community as terms that increase the vagueness of a text) and other words that seemed to make sense.
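The combination of default rules and a custom reading-rule-set could be sketched as follows. This is not the code of the writing-system: `DEFAULT_RULES` holds only a handful of noun scores from modality.py as quoted on this page, and the function name `highlight` and the inline "(+0.50)" annotation format are assumptions made for this example.

```python
# Illustrative sketch only: custom rules override a small set of default rules.
import re

# Hypothetical default rule-set, a subset of the noun scores quoted on this page.
DEFAULT_RULES = {
    "myth": -1.00,
    "doubt": -0.50,
    "belief": -0.25,
    "theory": 0.50,
    "evidence": 0.75,
    "power": 1.00,
}


def highlight(text, custom_rules=None):
    """Return the text with every word that matches a rule followed by its score."""
    rules = {**DEFAULT_RULES, **(custom_rules or {})}  # custom rules win over defaults

    def mark(match):
        word = match.group(0)
        score = rules.get(word.lower())
        return word if score is None else f"{word} ({score:+.2f})"

    return re.sub(r"[A-Za-z]+", mark, text)


print(highlight("The theory became a myth."))
# Re-reading the same sentence with a custom rule that moves "myth" to +1.00:
print(highlight("The theory became a myth.", {"myth": 1.00}))
```

Changing a single value in the rule-set changes how the whole text is read, which is the effect the interface makes visible.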

Supervised writing

The writing-system Supervised writing is built with a set of techniques that are often used in a supervised machine learning project. In a series of steps, the user is guided through a language processing system to create a custom counted-vocabulary writing exercise. On the way, the user meets the bag-of-words counting principle by exploring its numerical view on human language. With the option to work with text material from three external input sources (Twitter, DuckDuckGo or Wikipedia), this writing-system offers an alternative numerical view on well-known sources of textual data.
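The bag-of-words counting principle can be illustrated with a few lines of Python. This is a minimal sketch using only the standard library, not the code of the writing-system. The tokenisation rule and the names `bag_of_words` and `to_vector` are assumptions made for this example.

```python
# Illustrative sketch only: bag-of-words counting with the standard library.
import re
from collections import Counter


def bag_of_words(text):
    """Count how often each lowercased word occurs; word order is thrown away."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def to_vector(bag, vocabulary):
    """Express one bag as a list of counts, one position per vocabulary word."""
    return [bag[word] for word in vocabulary]


docs = ["I could have written that", "I could have read that, could I?"]
bags = [bag_of_words(d) for d in docs]

# The shared vocabulary is the sorted set of all words seen in any document.
vocabulary = sorted(set().union(*bags))
vectors = [to_vector(b, vocabulary) for b in bags]

print(vocabulary)
for doc, vector in zip(docs, vectors):
    print(vector, doc)
```

Each text ends up as a row of numbers of the same length, which is the form a supervised learning algorithm expects as input.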

Cosine Similarity morphs

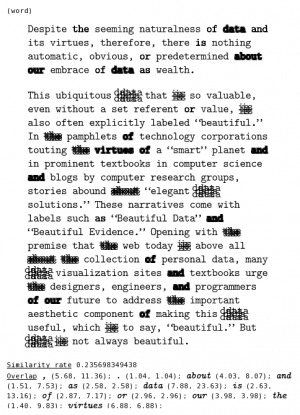

The writing-system Cosine Similarity morphs works with unsupervised similarity measurements on sentence level. The textual source of choice is first transformed into a corpus and a vector matrix, after which the cosine similarity function from SciKit Learn is applied. The cosine similarity function is often used in unsupervised machine learning practices to extract hidden semantic information from text. Since the textual data is shown to the computer without any label, this technique is often referred to as unsupervised learning.

The interface lets the user select from a set of possible counting methods, also called features, to create a spectrum of the four most similar sentences. While creating multiplicity as a result, the interface includes numerical information on the similarity calculations that have been made. The user, the cosine similarity function, the author of the text of choice, and the maker of this writing-system collectively create a quartet of sentences that morph between a linguistic and a numerical understanding of similarity.
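The similarity measurement can be sketched in plain Python. The writing-system itself relies on the cosine similarity function from SciKit Learn, so the code below is a standard-library stand-in for the same formula (the dot product of two count vectors divided by the product of their lengths), not the project's code. The two counting methods `word_counts` and `char_bigrams`, the helper `most_similar` and the example sentences are assumptions made for this illustration.

```python
# Illustrative sketch only: cosine similarity between sentences, standard library only.
import math
import re
from collections import Counter


def word_counts(sentence):
    """Feature 1: count whole words."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def char_bigrams(sentence):
    """Feature 2: count pairs of adjacent letters, ignoring spaces and punctuation."""
    letters = re.sub(r"[^a-z]", "", sentence.lower())
    return Counter(letters[i:i + 2] for i in range(len(letters) - 1))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors stored as Counters."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query, sentences, feature=word_counts, k=4):
    """Return the k (score, sentence) pairs with the highest similarity to the query."""
    q = feature(query)
    scored = [(cosine_similarity(q, feature(s)), s) for s in sentences]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


sentences = [
    "the machine reads the text",
    "the machine writes the text",
    "a poster about certainty",
    "rules are written by linguists",
    "counting words in a text",
]

for feature in (word_counts, char_bigrams):
    print(feature.__name__)
    for score, sentence in most_similar("the machine reads", sentences, feature):
        print(f"  {score:.2f}  {sentence}")
```

Switching the feature changes which sentences count as similar, even though the text and the similarity function stay the same.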

Colophon

i-could-have-written-that is a project by Manetta Berends and is kindly supported by CBK Rotterdam. The code and output documents are licensed under the Free Art License.

* The title 'i-could-have-written-that' is derived from the paper ELIZA--A Computer Program For the Study of Natural Language Communication Between Man and Machine, written by Joseph Weizenbaum and published in 1966.